
Design a Notification Service / Use Case of Netflix's Push Server System

흑개1 2025. 5. 25. 00:43

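Designing a Notification System

Requirements

- Supported notification types: push notifications, SMS messages, email

- Soft real-time system: notifications should be delivered as quickly as possible, but a small delay is acceptable

- Supported devices: iOS devices, Android devices, laptops/desktops

- Users can opt out of receiving notifications

- Performance: must be able to send about 10 million mobile push notifications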

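System components

How the notification mechanism works

- Notification provider: builds a notification request and sends it to a third-party notification service, which actually delivers the notification to the user's device. Third-party services include APNs (iOS), FCM (Android), and Twilio (SMS). Building a notification request requires the following data:

- Device token: the unique identifier needed to send a notification request

- Payload: a JSON dictionary containing the notification content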
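As a rough illustration of the two pieces of data above, the sketch below pairs a device token with a payload dictionary. The class and method names are hypothetical, and the payload is only shaped like an APNs alert (`aps` → `alert`); it is not a client for any real provider.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical names throughout; this is not an official APNs or FCM client.
final class NotificationRequestSketch {
    final String deviceToken;           // identifies the target device
    final Map<String, Object> payload;  // JSON-like dictionary with the content

    NotificationRequestSketch(String deviceToken, Map<String, Object> payload) {
        this.deviceToken = deviceToken;
        this.payload = payload;
    }

    // Builds a payload shaped like an APNs alert: {"aps": {"alert": {"title", "body"}}}.
    static NotificationRequestSketch alert(String deviceToken, String title, String body) {
        Map<String, Object> alert = new LinkedHashMap<>();
        alert.put("title", title);
        alert.put("body", body);
        Map<String, Object> aps = new LinkedHashMap<>();
        aps.put("alert", alert);
        Map<String, Object> payload = new LinkedHashMap<>();
        payload.put("aps", aps);
        return new NotificationRequestSketch(deviceToken, payload);
    }
}
```

A real provider would serialize the payload to JSON and send it, together with the token, to the third-party service.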

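System architecture

- Services: microservices, cron jobs, or other services that send notifications through the notification server's API

- Notification server: provides the following functions

- Notification API: exposes an API that each microservice uses to send notifications

- Validation: performs basic validation of email addresses and phone numbers

- Database and cache queries: fetches the data to include in the notification (e.g. notification templates, user info, device info, notification settings)

- Notification dispatch: puts the notification data into a message queue

- Message queue: acts as a buffer; one queue per notification type; decouples the components

- Workers: pull notifications from the message queues and send them to third-party services

- Third-party services: deliver the notifications to the user's device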
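The flow described in the list above (validate, enqueue per notification type, let workers pull) might be sketched as follows. Everything here is an assumption for illustration: the class names, the deliberately loose validation patterns, and the in-memory `LinkedBlockingQueue` standing in for a real message queue.

```java
import java.util.EnumMap;
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical sketch: in-memory queues stand in for a real message broker.
final class NotificationPipelineSketch {
    enum Channel { PUSH, SMS, EMAIL }

    static final class Notification {
        final Channel channel;
        final String recipient; // device token, phone number, or email address
        final String body;

        Notification(Channel channel, String recipient, String body) {
            this.channel = channel;
            this.recipient = recipient;
            this.body = body;
        }
    }

    // One queue per notification type, so a slow channel does not block the others.
    private final Map<Channel, BlockingQueue<Notification>> queues = new EnumMap<>(Channel.class);

    NotificationPipelineSketch() {
        for (Channel c : Channel.values()) {
            queues.put(c, new LinkedBlockingQueue<>());
        }
    }

    // Notification server side: basic validation, then enqueue.
    boolean submit(Notification n) {
        return isValid(n) && queues.get(n.channel).offer(n);
    }

    // Intentionally loose checks; real validation rules are an open design choice.
    static boolean isValid(Notification n) {
        if (n.recipient == null || n.recipient.isBlank()) return false;
        switch (n.channel) {
            case EMAIL: return n.recipient.matches("[^@\\s]+@[^@\\s]+\\.[^@\\s]+");
            case SMS:   return n.recipient.matches("\\+?[0-9]{7,15}");
            default:    return true;
        }
    }

    // Worker side: take the next notification for one channel, or null if none is waiting.
    Notification poll(Channel channel) {
        return queues.get(channel).poll();
    }
}
```

A worker per channel would call `poll` in a loop and hand each notification to the matching third-party service.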

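Additional considerations

Reliability

- Preventing data loss: if a worker fails to send a notification to a third-party service, persist the notification data in a database and implement a retry mechanism; the notification log kept in the database is what gets retried

- Preventing duplicate notifications: check each notification's event ID; if the event has already been sent, discard it; otherwise send the notification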
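The two reliability points above could be combined roughly as below. This is a simplified sketch under stated assumptions: an in-memory set stands in for the database of sent event IDs, retries happen inline without backoff, and `thirdParty` is a hypothetical callback that returns `true` on success.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Predicate;

// Hypothetical sketch: the set stands in for a persistent notification log.
final class ReliableSenderSketch {
    enum Result { SENT, DUPLICATE, FAILED }

    private final Set<String> claimedEventIds = ConcurrentHashMap.newKeySet();
    private final Predicate<String> thirdParty; // returns true when delivery succeeds
    private final int maxAttempts;

    ReliableSenderSketch(Predicate<String> thirdParty, int maxAttempts) {
        if (maxAttempts < 1) throw new IllegalArgumentException("maxAttempts must be >= 1");
        this.thirdParty = thirdParty;
        this.maxAttempts = maxAttempts;
    }

    Result send(String eventId, String payload) {
        // Atomically claim the event ID; a second caller with the same ID is a duplicate.
        if (!claimedEventIds.add(eventId)) {
            return Result.DUPLICATE;
        }
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (thirdParty.test(payload)) {
                return Result.SENT;
            }
        }
        // Release the claim so a later retry of the same event is not treated as a duplicate.
        claimedEventIds.remove(eventId);
        return Result.FAILED;
    }
}
```

In a real system the `FAILED` branch is where the notification would be written back to the database for a later retry.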

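Other considerations

- Notification templates: let a notification be produced in a predefined format by changing only a few variables in the message. The notification server stores the templates in a DB or cache

- Notification settings: store each user's notification preferences; the table holds user_id, channel (the delivery channel), and opt_in (whether the user receives notifications)

- Rate limiting, retries, queue monitoring

- Event tracking: track notification events through a data analytics service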
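To make the notification template item above concrete, here is a minimal rendering sketch. The `{{name}}` placeholder syntax and the `TemplateSketch` class are assumptions for illustration; the original does not specify a template format.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch: {{variable}} placeholders are an assumed syntax.
final class TemplateSketch {
    private static final Pattern VARIABLE = Pattern.compile("\\{\\{(\\w+)\\}\\}");

    // Replaces every {{name}} in the template with the matching value.
    static String render(String template, Map<String, String> variables) {
        Matcher matcher = VARIABLE.matcher(template);
        StringBuilder out = new StringBuilder();
        while (matcher.find()) {
            String name = matcher.group(1);
            String value = variables.get(name);
            if (value == null) {
                throw new IllegalArgumentException("missing template variable: " + name);
            }
            // quoteReplacement keeps '$' and '\' in values from being treated as regex syntax.
            matcher.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        matcher.appendTail(out);
        return out.toString();
    }
}
```

For example, rendering `"Hi {{name}}, your order {{id}} has shipped"` with `name=Kim` and `id=42` yields `"Hi Kim, your order 42 has shipped"`.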

Wrap-up

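- Each service sends notifications to the notification gateway

- The gateway can receive notifications one at a time or in batches

- The notification gateway forwards notifications to the notification service, which handles validation, formatting, scheduling, and so on. Templates can be used to define the message format

- The notification service passes the message to the router (message queue)

- The router connects to the third-party channels, which send the notification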

Use Case: Scaling Push Messaging for Millions of Devices @Netflix

Overview of the system

The Push Registry keeps metadata about user-to-server mappings.

The Push Library is an interface for sending notifications that hides all the details. A sendMessage() call puts the message into a push message queue; using queues decouples senders from receivers.

The Message Processor reads from the queue and performs the actual delivery. It looks up the push registry to identify which server a user is connected to, then delivers the message to that Zuul Push Server.

Zuul Push Servers register users in the push registry with the mapping between a particular user and server, then send push messages over WebSockets or SSE, which provide persistent, open connections to many devices.
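The interaction between these four components can be pictured with a toy, single-process model. All names below are hypothetical, plain collections stand in for the registry, the queue, and the push servers, and nothing here reflects Netflix's actual code.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Queue;

// Toy model only: collections stand in for the registry, queue, and push servers.
final class PushFlowSketch {
    private final Map<String, String> registry = new HashMap<>();        // userId -> serverId
    private final Queue<String[]> queue = new ArrayDeque<>();            // {userId, message}
    private final Map<String, List<String>> delivered = new HashMap<>(); // serverId -> messages

    // Push server side: record which server holds the user's connection.
    void register(String userId, String serverId) {
        registry.put(userId, serverId);
    }

    // Push library side: the sender only enqueues and knows nothing about servers.
    void sendMessage(String userId, String message) {
        queue.add(new String[] {userId, message});
    }

    // Message processor side: drain the queue, look up the registry, hand over to the server.
    int processAll() {
        int count = 0;
        String[] item;
        while ((item = queue.poll()) != null) {
            String serverId = registry.get(item[0]);
            if (serverId == null) {
                continue; // user is not connected anywhere; this sketch simply drops the message
            }
            delivered.computeIfAbsent(serverId, k -> new ArrayList<>()).add(item[1]);
            count++;
        }
        return count;
    }

    List<String> deliveredTo(String serverId) {
        return delivered.getOrDefault(serverId, List.of());
    }
}
```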

Component

Zuul push server

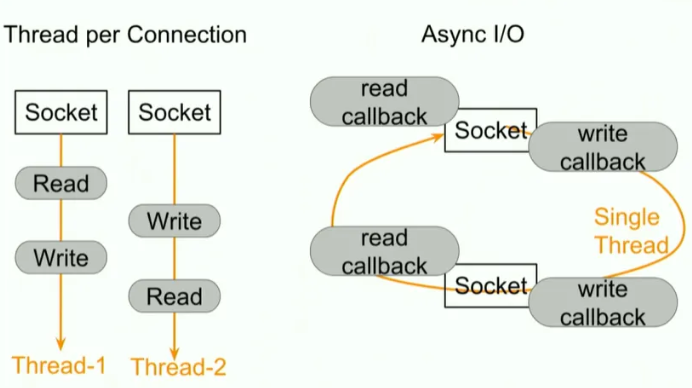

Zuul push servers must each hold 10000+ persistent connections. How is that possible?

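    // Simplified pipeline as shown in the talk; constructor arguments are omitted.
    protected void addPushHandlers(ChannelPipeline pl) {
        pl.addLast(new HttpServerCodec());                   // HTTP request/response codec
        pl.addLast(new HttpObjectAggregator());              // assembles chunks into full HTTP messages
        pl.addLast(getPushAuthHandler());                    // custom auth handler
        pl.addLast(new WebSocketServerCompressionHandler()); // WebSocket compression extension
        pl.addLast(new WebSocketServerProtocolHandler());    // WebSocket handshake and control frames
        pl.addLast(getPushRegistrationHandler());            // registers the connection
    }

It is possible because the server uses an asynchronous I/O programming model built on Netty: instead of dedicating a thread to each connection, all open connections are multiplexed onto a single thread.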

Push Registry

The PushRegistrationHandler (the handler that writes to the push registry) looks like this:

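    public class MyRegistration extends PushRegistrationHandler {
        @Override
        protected void registerClient(
                ChannelHandlerContext ctx,
                PushUserAuth auth,
                PushConnection conn,
                PushConnectionRegistry registry) {
            // Register the connection in the server's local registry first.
            super.registerClient(ctx, auth, conn, registry);
            // Then store the user-to-server mapping in the external registry (Redis here)
            // as a task on the channel's executor.
            ctx.executor().submit(() -> storeInRedis(auth));
        }
    }

Requirements for the push registry's data store: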

- Low read latency (the service looks up the push registry every time a push event is sent)

- Record expiry (clients can leave phantom connections behind, so phantom registration records must be cleaned up after a certain timeout)

- Sharding

- Replication

Netflix uses Dynomite, a tool they built in-house that wraps Redis and adds features such as auto-sharding, read/write quorum, and cross-region replication.
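The record-expiry requirement above can be illustrated with a small in-memory registry whose entries lapse after a TTL. This is only a sketch: the class is hypothetical, the clock is injected so the behavior is easy to test, and a store like Redis would normally handle expiry itself.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.LongSupplier;

// Hypothetical sketch of TTL-based expiry; a real registry would live in an external store.
final class ExpiringRegistrySketch {
    private static final class Entry {
        final String serverId;
        final long expiresAtMillis;

        Entry(String serverId, long expiresAtMillis) {
            this.serverId = serverId;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<String, Entry> entries = new ConcurrentHashMap<>();
    private final long ttlMillis;
    private final LongSupplier clock; // e.g. System::currentTimeMillis

    ExpiringRegistrySketch(long ttlMillis, LongSupplier clock) {
        this.ttlMillis = ttlMillis;
        this.clock = clock;
    }

    // Called on connect (and again on reconnect or refresh) to renew the record.
    void register(String userId, String serverId) {
        entries.put(userId, new Entry(serverId, clock.getAsLong() + ttlMillis));
    }

    // Returns the server for a user, treating expired records as absent.
    Optional<String> lookup(String userId) {
        Entry entry = entries.get(userId);
        if (entry == null) {
            return Optional.empty();
        }
        if (clock.getAsLong() >= entry.expiresAtMillis) {
            entries.remove(userId, entry); // drop the phantom record, but only this exact one
            return Optional.empty();
        }
        return Optional.of(entry.serverId);
    }
}
```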

Message Processor

The component that queues, routes, and delivers messages.

queue

Netflix uses Kafka for its message queues.

To confirm that a message was delivered successfully, senders can subscribe to a push delivery status queue, or read in batch mode from a DB table where all push messages are logged.

route

Senders may not know which region a client is connected to, so the message processing component routes each message to the correct region on their behalf. Using Kafka, Netflix replicates messages across all three regions so that they can actually be delivered across regions.

handling priority

To implement message priorities, they use a separate queue for each priority. This way priority inversion, where a higher-priority message is made to wait behind a batch of lower-priority messages, can never happen.
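One way to picture "a separate queue per priority" is the sketch below. The three priority levels, the class name, and the strict highest-first draining policy are all assumptions; the original only says that different queues are used for different priorities.

```java
import java.util.ArrayDeque;
import java.util.EnumMap;
import java.util.Map;
import java.util.Queue;

// Hypothetical sketch: priority levels and the draining policy are assumed.
final class PriorityQueuesSketch {
    enum Priority { HIGH, NORMAL, LOW } // declaration order doubles as the serving order

    private final Map<Priority, Queue<String>> queues = new EnumMap<>(Priority.class);

    PriorityQueuesSketch() {
        for (Priority p : Priority.values()) {
            queues.put(p, new ArrayDeque<>());
        }
    }

    void enqueue(Priority priority, String message) {
        queues.get(priority).add(message);
    }

    // Serves the highest non-empty priority first, so a HIGH message never
    // waits behind LOW messages that were enqueued earlier.
    String next() {
        for (Priority p : Priority.values()) {
            String message = queues.get(p).poll();
            if (message != null) {
                return message;
            }
        }
        return null; // every queue is empty
    }
}
```

With a single shared FIFO queue, the same HIGH message would have to wait for everything ahead of it, which is exactly the inversion being avoided.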

How do they handle high throughput?

They run multiple message processor instances in parallel to scale message throughput.

Their container management system (Mesos) makes it easy to quickly spin up a batch of message processor instances when message processing is falling behind.

Critically, it automatically adjusts the number of message processors according to the number of messages waiting in the push message queue, without any additional configuration.

Operating Notification Service

Thundering herd problems

Connections to clients are stateful because they use SSE or WebSocket. When a quick deploy or rollback happens, all connections to the existing cluster are closed and the clients try to reconnect to the new cluster at the same time. This causes a thundering herd problem.

The result is a large traffic spike on the servers at that moment, so they implemented the following measures to spread out the traffic generated by reconnections:

- Limit the lifetime of a single connection: the connection is closed automatically after a certain amount of time and the client reconnects to whichever server the load balancer picks, which prevents stickiness to one server

- Randomize the lifetime: this spreads reconnection times out
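The two measures in the list above, a bounded connection lifetime plus randomization, could be computed as in the following sketch. The base and jitter values and the helper name are hypothetical; the original does not give concrete numbers.

```java
import java.time.Duration;
import java.util.Random;

// Hypothetical helper: picks a per-connection lifetime with random jitter.
final class ConnectionLifetimeSketch {
    // Returns a lifetime in [base, base + jitter), so connections opened at the
    // same moment do not all expire, and reconnect, at the same moment.
    static Duration pickLifetime(Duration base, Duration jitter, Random random) {
        long extraMillis = (long) (random.nextDouble() * jitter.toMillis());
        return base.plusMillis(extraMillis);
    }
}
```

For example, `pickLifetime(Duration.ofMinutes(25), Duration.ofMinutes(10), new Random())` spreads expirations over a ten-minute window instead of a single instant.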

Currently, the server no longer closes connections directly. Instead, it asks the client to terminate the connection.

This design is based on how TCP handles connection teardown: the side that initiates the close enters the TIME_WAIT state, which, according to the talk, can hold a file descriptor on Linux for up to 2 minutes. Since server-side file descriptors are more valuable (the server handles a large number of concurrent connections), they shift this burden to the client.

To handle unresponsive clients, the server also runs a timer: if the client does not respond to the close request within a certain timeout, the server forcefully terminates the connection.
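The close handshake described above, where the server asks, the client closes, and the server forces the close only after a timeout, can be modeled as a small state machine. The state names and the explicit `tick` method are assumptions made to keep the sketch deterministic; a real server would use a scheduled timer on its event loop.

```java
// Hypothetical state machine for the "ask the client to close" flow.
final class GracefulCloseSketch {
    enum State { OPEN, CLOSE_REQUESTED, CLOSED_BY_CLIENT, FORCE_CLOSED }

    private final long timeoutMillis;
    private State state = State.OPEN;
    private long deadlineMillis;

    GracefulCloseSketch(long timeoutMillis) {
        this.timeoutMillis = timeoutMillis;
    }

    // Server asks the client to close and starts the timeout.
    void requestClose(long nowMillis) {
        if (state == State.OPEN) {
            state = State.CLOSE_REQUESTED;
            deadlineMillis = nowMillis + timeoutMillis;
        }
    }

    // Client initiated the TCP close, so TIME_WAIT lands on the client side.
    void onClientClosed() {
        if (state == State.OPEN || state == State.CLOSE_REQUESTED) {
            state = State.CLOSED_BY_CLIENT;
        }
    }

    // Timer check: force the close only if the client never responded in time.
    void tick(long nowMillis) {
        if (state == State.CLOSE_REQUESTED && nowMillis >= deadlineMillis) {
            state = State.FORCE_CLOSED;
        }
    }

    State state() {
        return state;
    }
}
```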

Optimize push server

One large, powerful server <<<<< a larger number of cheap, small servers

At the beginning, Netflix saw that most connections are idle, so CPU and memory usage is low. They therefore packed as many connections onto a single server as it could handle. But that created a single point of failure (SPOF), and the thundering herd problem came back whenever that server failed.

So they used a Goldilocks strategy: multiple servers of moderate specs. This allowed the system to remain resilient even if some servers failed.

How do they auto-scale the servers?

They use the number of open connections as the scaling metric. CPU does not work because most connections are idle, and RPS (requests per second) does not work either because the connections are long-lived, so there is no continuous stream of requests. They export this metric to CloudWatch, and AWS auto-scaling can be configured on that custom metric.
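The reasoning above, scaling on open connections rather than CPU or RPS, amounts to a calculation like the one below. The function and its parameters (target connections per server, minimum and maximum fleet size) are hypothetical; in practice the auto-scaling policy itself would do this from the exported metric.

```java
// Hypothetical calculation; a real setup would express this as an auto-scaling policy.
final class ConnectionScalingSketch {
    // Servers needed so that each holds at most targetPerServer connections,
    // clamped to the allowed fleet size.
    static int desiredServers(long openConnections, long targetPerServer,
                              int minServers, int maxServers) {
        if (targetPerServer <= 0) {
            throw new IllegalArgumentException("targetPerServer must be positive");
        }
        long needed = (openConnections + targetPerServer - 1) / targetPerServer; // ceiling division
        return (int) Math.max(minServers, Math.min(maxServers, needed));
    }
}
```

For instance, 95,000 open connections with a target of 10,000 per server gives 10 servers, regardless of how idle the CPUs are.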

Sources:

system-design-101/data/guides/how-does-netflix-scale-push-messaging-for-millions-of-devices.md at main · ByteByteGoHq/system-de

Explain complex systems using visuals and simple terms. Help you prepare for system design interviews. - ByteByteGoHq/system-design-101

github.com

system-design-101/data/guides/how-does-a-typical-push-notification-system-work.md at main · ByteByteGoHq/system-design-101


https://www.infoq.com/presentations/neflix-push-messaging-scale/

Scaling Push Messaging for Millions of Devices @Netflix

Susheel Aroskar talks about Zuul Push, a scalable push notification service that handles millions of "always-on" persistent connections from all the Netflix apps running. He covers the design of the Zuul Push server and reviews the design details of the ba

www.infoq.com

As a side note, I highly recommend watching the video at the link above.
